Google has a “Places” API…and a “NEW Places” API just to make things more confusing.

The Places API is all of the stuff you see in Google Maps.

If you wanted to do something fun, like contact all the dentists in your area to sell them the Ultra Gum Scraper 2025 (now causing 25% more bleeding), you could search for them on Google Maps, and hell, even expand to the state, all of the US, maybe even the world.

Contacting all of them is probably going to require something like a spreadsheet though to keep track of who answers, who’s interested, and who has threatened you, personally, with legal action to never call them again.

You could copy all of this data by hand from Google Maps into a spreadsheet in Excel or Google’s own Sheets product, but we can probably build that list faster by automating it.

Enter Google’s Places API. Oh, I already mentioned that? Did I mention the NEW Places API?

A lot of Google’s API stuff isn’t free anymore, so you may need to set up billing. But fortunately, the billing is generally pretty reasonable. More towards cents than dollars for tasks like this unless you’re generating thousands upon thousands of requests, at which point I’d like to think you’re offsetting this with some kind of profit.

I’d painstakingly detail how to set up the Google Places and New Places API via a Google Cloud account if you don’t already have one, but luckily for us both Google has already detailed this here:

Set Up Your Google Cloud Project for Google Maps/Places

This will walk you through setting up your Google Cloud project and getting an API key, which is really all you’ll need to get started.

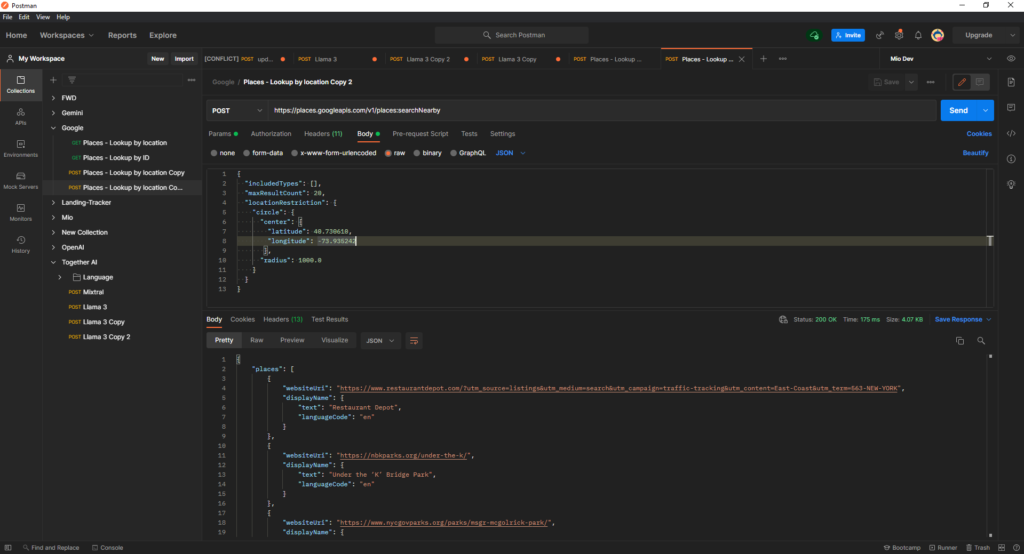

Whenever I work with a REST API, my first step is to open Postman, Insomnia, or a similar client so I can test requests and experiment with how the API behaves.

I’m currently looking for businesses in my area. The new Places API handles this with a single POST call to the URL below. I recommend the new API, by the way, because the old one requires two calls: one to look up the IDs with a search, and one to look up the additional data by ID.

https://places.googleapis.com/v1/places:searchNearby

With a JSON payload like this:

    {
      "includedTypes": [],
      "maxResultCount": 20,
      "locationRestriction": {
        "circle": {
          "center": {
            "latitude": 40.730610,
            "longitude": -73.935242
          },
          "radius": 1000.0
        }
      }
    }

You also need to make sure you set some custom headers: your typical Content-Type: application/json, but also:

X-Goog-Api-Key -> your API key

AND

X-Goog-FieldMask -> places.displayName,places.websiteUri (the fields you want returned)

The documentation on the Google setup page linked above lists the fields you can return.

Notice that you have to provide a latitude, a longitude, and a radius to find things near you, and that there is a cap on the number of results. Twenty is the most you can request in a single call. With these awkward restrictions you’re nearly in the same boat as manually searching.

But that’s where a programming language comes in, such as Node, and by Node I mean Python because Node’s Await/Async/Promise behavior is always a nightmare to get right and I don’t have all fucking day.

And here’s what that script ended up looking like:

    import http.client
    import json
    import csv

    # Please put your API key below or it won't work
    api_key = 'AIpUtY0uR4pIK3yheREmOthaF3R'

    # Starting point for the search
    lat = 40.730610
    lng = -73.935242

    # Results keyed by Google place ID, so a repeat hit overwrites
    # the existing entry instead of adding a duplicate
    places_by_id = {}

    for i in range(0, 20):
        conn = http.client.HTTPSConnection("places.googleapis.com")
        payload = json.dumps({
            "includedTypes": [],
            "maxResultCount": 20,
            "locationRestriction": {
                "circle": {
                    "center": {
                        "latitude": lat,
                        "longitude": lng
                    },
                    "radius": 1000
                }
            }
        })
        headers = {
            'X-Goog-Api-Key': api_key,
            'X-Goog-FieldMask': 'places.displayName,places.websiteUri,places.id,places.googleMapsUri',
            'Content-Type': 'application/json'
        }
        conn.request("POST", "/v1/places:searchNearby", payload, headers)
        res = conn.getresponse()
        data = res.read()
        conn.close()
        parsed_data = json.loads(data.decode("utf-8"))

        # 'places' is missing from the response when nothing was found
        places = parsed_data.get('places')
        if places:
            for place in places:
                places_by_id[place['id']] = {
                    'id': place['id'],
                    'googleMaps': place['googleMapsUri'],
                    'text': place['displayName']['text'],
                    'website': place.get('websiteUri'),
                }

        # Shift the search circle north for the next request
        lat = lat + 0.01

    for place in places_by_id:
        print(places_by_id[place])

    # Write one row per unique place
    with open('places.csv', 'w', newline='') as csvfile:
        fieldnames = ['id', 'googleMaps', 'text', 'website']
        writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
        writer.writeheader()
        for place in places_by_id:
            writer.writerow(places_by_id[place])

This script does a couple of key things.

- It does all of the stuff the Postman request did, but it does it 20 times while increasing the latitude by 0.01 each iteration. This moves the search circle a little further north each time. The problem is that there will potentially be duplicate businesses because the lat/long ranges don’t neatly map to a number of meters. Or maybe they do, but what am I gonna do, look up a formula? I’m a programmer, not a mathematician, Jim.

- So, I have a Python dictionary where each key is the unique Google ID for the business. A repeat hit just overwrites the existing entry, so duplicates collapse into one record in one master data structure instead of piling up as redundant data.

- Then ultimately I save that data into a CSV.
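For the record, the formula is short. Here is a rough sketch of the conversion, assuming about 111,320 meters per degree of latitude. That constant is an approximation (the true value drifts by around 1% depending on where you are), and the helper names are made up for illustration.

```python
import math

# Approximate meters in one degree of latitude (an assumed constant;
# the real value varies slightly between the equator and the poles).
METERS_PER_DEGREE_LAT = 111_320.0


def lat_degrees_to_meters(degrees: float) -> float:
    """Convert a north/south offset in degrees to meters."""
    return degrees * METERS_PER_DEGREE_LAT


def meters_to_lat_degrees(meters: float) -> float:
    """Convert a north/south distance in meters to degrees of latitude."""
    return meters / METERS_PER_DEGREE_LAT


def meters_to_lng_degrees(meters: float, at_latitude: float) -> float:
    """Convert an east/west distance in meters to degrees of longitude.

    Lines of longitude squeeze together away from the equator, so the
    answer depends on the latitude you are standing at.
    """
    return meters / (METERS_PER_DEGREE_LAT * math.cos(math.radians(at_latitude)))


print(round(lat_degrees_to_meters(0.01)))  # 1113
```

So a 0.01 degree step north is roughly 1.1 km. With a 1000 meter radius, each circle overlaps the one before it, which is where the duplicate businesses come from.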

You may also note I’ve added some fields like the ID and the original Google Maps link. My next step will be an algorithm that circles your starting point and slowly expands outward, instead of moving in one simple direction.
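One possible shape for that, as a sketch only: build a square grid of search centers around the start and visit them ring by ring, closest first. The function name `grid_centers`, the `step_m` and `rings` parameters, and the meters-per-degree constant are all assumptions for illustration, not part of the script above or of Google's API.

```python
import math

# Approximate meters in one degree of latitude (assumed constant).
METERS_PER_DEGREE_LAT = 111_320.0


def grid_centers(lat, lng, step_m=1000.0, rings=2):
    """Return (lat, lng) search centers on a square grid around a start point.

    Centers are ordered ring by ring: the start point first, then the
    8 surrounding cells, then the next ring out, and so on.
    """
    lat_step = step_m / METERS_PER_DEGREE_LAT
    lng_step = step_m / (METERS_PER_DEGREE_LAT * math.cos(math.radians(lat)))

    offsets = [
        (x, y)
        for x in range(-rings, rings + 1)
        for y in range(-rings, rings + 1)
    ]
    # The larger of |x| and |y| is the ring number, so sorting on it
    # walks outward from the center.
    offsets.sort(key=lambda o: max(abs(o[0]), abs(o[1])))

    return [(lat + y * lat_step, lng + x * lng_step) for x, y in offsets]


centers = grid_centers(40.730610, -73.935242, step_m=1000.0, rings=2)
print(len(centers))  # 25
```

Each center would then get its own searchNearby request, with the place-ID dictionary soaking up the overlap between neighboring circles.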

This can also be modified to return any number of fields and to filter on specific types of businesses, but I wanted to put something very basic here for starters.
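As a sketch of what that modification might look like, the payload and headers can be built by a small helper that takes the business types and fields as arguments. The helper name `build_search_request` is hypothetical, and the `"dentist"` type string is an assumption to check against Google's list of supported place types before relying on it.

```python
import json


def build_search_request(api_key, lat, lng, radius_m=1000.0,
                         included_types=(), fields=("displayName", "websiteUri")):
    """Build the JSON payload and headers for a places:searchNearby call.

    included_types: place type strings to filter on (empty means no filter).
    fields: place field names; each gets the 'places.' prefix in the mask.
    """
    payload = json.dumps({
        "includedTypes": list(included_types),
        "maxResultCount": 20,  # the most a single call will return
        "locationRestriction": {
            "circle": {
                "center": {"latitude": lat, "longitude": lng},
                "radius": radius_m,
            }
        },
    })
    headers = {
        "X-Goog-Api-Key": api_key,
        "X-Goog-FieldMask": ",".join(f"places.{name}" for name in fields),
        "Content-Type": "application/json",
    }
    return payload, headers


payload, headers = build_search_request(
    "YOUR_API_KEY", 40.730610, -73.935242,
    included_types=["dentist"],  # assumed type name, verify before use
    fields=["id", "displayName", "websiteUri", "googleMapsUri"],
)
print(headers["X-Goog-FieldMask"])
```

The returned pair drops straight into the `conn.request("POST", "/v1/places:searchNearby", payload, headers)` call from the script.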

You can always Email Me or Tweet Me if you want to implement something like this or to do something more advanced or get stuck. I’m always available to help.

My next updates will probably be around updating this script or writing a simple tool that goes through websites and extracts email addresses. Web scraping is always a fun pastime.
